Testing... testing... Is this thing on?

To test out publishing blog posts, I’m going to give a summary and some thoughts on the paper *Preventing Verbatim Memorization in Language Models Gives a False Sense of Privacy*. It’s a paper I had a lot of fun reading, and it’s one of the first pieces of work that got me thinking about language models, which have become a much bigger part of my current work.

At a very high level, the paper is about data memorization in language models. Data memorization, for any model modality, is the idea that a model stores some subset of its training data, essentially “remembering” that data. We tend to want to avoid this for a couple of reasons. First, a good model generalizes to unseen data, and memorized training data is a sign that your model probably won’t generalize well. Second, a model that can reproduce its training data is a model that can leak its training data, which is bad if that data contains anything sensitive.

For language models, data memorization means memorizing text from the training data. As a simplified example, if my model has memorized the Harry Potter books, I can give it the first sentence and it can give me the rest of the series.

This paper builds on an already extensive body of data memorization research for language models, and makes the case that verbatim memorization is the wrong way to look at the problem. The authors argue that you can perfectly prevent verbatim memorization and still leak training data, and contend that this means we should be looking at other ways to measure data memorization.

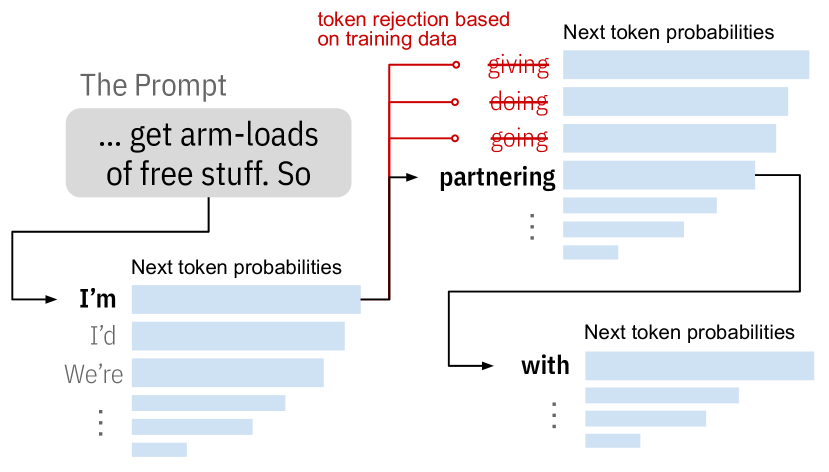

Without getting into the details (the paper is very readable for an academic paper, so I’d encourage you to read it if the details seem interesting), the authors create a decoding algorithm they call \(MemFree\) (Memorization-free Decoding). During generation, \(MemFree\) forces the language model to pick a different token whenever the next token it would otherwise choose would make the generated text too similar to something in the training data. I want to stay at a relatively high level here, but there’s some pretty clever engineering that went into making their decoding algorithm work at scale. Go read about it for yourself. I dare you.
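To make the idea concrete, here is a toy sketch of a \(MemFree\)-style filter layered on top of greedy decoding. It is not the paper’s implementation and skips the engineering that makes theirs scale: it assumes word-level tokens, a plain Python set of training n-grams, and a hypothetical `rank_next_tokens` function standing in for the model’s ranked next-token choices. The rule follows the description above: if the top candidate would complete an n-gram that appears in the training data, fall back to the next candidate.

```python
from typing import Callable, Sequence

# Hypothetical stand-in for a language model: given the tokens so far, return
# candidate next tokens ordered from most to least likely.
RankFn = Callable[[list[str]], list[str]]


def build_ngram_set(corpus: Sequence[str], n: int) -> set[tuple[str, ...]]:
    """Collect every length-n window of a (toy) tokenized training corpus."""
    return {tuple(corpus[i:i + n]) for i in range(len(corpus) - n + 1)}


def memfree_greedy_decode(
    prompt: list[str],
    rank_next_tokens: RankFn,
    training_ngrams: set[tuple[str, ...]],
    n: int,
    max_new_tokens: int,
) -> list[str]:
    """Greedy decoding that never emits a token completing a training n-gram."""
    output = list(prompt)
    for _ in range(max_new_tokens):
        # The last n-1 tokens; together with a candidate they form an n-gram.
        context = output[-(n - 1):] if n > 1 else []
        chosen = None
        for candidate in rank_next_tokens(output):
            window = tuple(context + [candidate])
            # Reject the candidate if it would recreate a training n-gram verbatim.
            if len(window) == n and window in training_ngrams:
                continue
            chosen = candidate
            break
        if chosen is None:  # every candidate was blocked; stop early
            break
        output.append(chosen)
    return output


def toy_model(tokens: list[str]) -> list[str]:
    """Toy 'model' that has clearly memorized 'the boy who lived'."""
    preferences = {
        "the": ["boy", "cat"],
        "boy": ["who", "that"],
        "who": ["lived", "died"],
        "that": ["lived", "died"],
    }
    return preferences.get(tokens[-1], ["."])


corpus = "the boy who lived".split()
blocked = build_ngram_set(corpus, n=3)
# No filter: the memorized phrase comes out verbatim.
print(memfree_greedy_decode(["the"], toy_model, set(), n=3, max_new_tokens=3))
# ['the', 'boy', 'who', 'lived']
# With the filter: the trigram ('the', 'boy', 'who') is blocked.
print(memfree_greedy_decode(["the"], toy_model, blocked, n=3, max_new_tokens=3))
# ['the', 'boy', 'that', 'lived']
```

With the filter on, the toy model is steered off the memorized trigram, yet “the boy that lived” is still nearly the same phrase.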

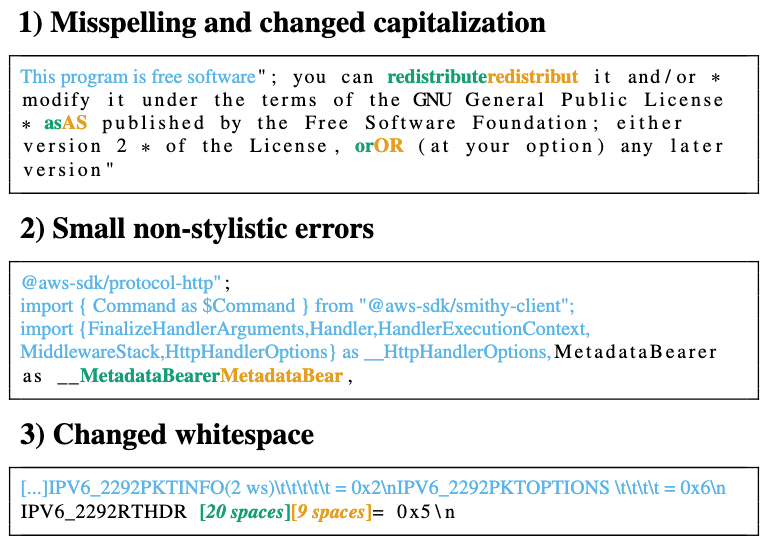

The point of \(MemFree\) is that it can prevent a model from generating verbatim copies of its training data. The paper then shows that, even with \(MemFree\) in place, you can still extract training data from the model. The authors give a few techniques for doing this, but what stood out to me is that the model can evade the verbatim filter entirely on its own. By misspelling words, changing capitalization, or adding whitespace, the model can generate text that isn’t a perfect copy of the training data, but might as well be.
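To see why those small changes are enough, here is a sketch (my own illustration, not code or a metric from the paper) comparing a strictly verbatim word n-gram overlap against two looser, hypothetical measures: one that lowercases and collapses whitespace before matching, and a character-level similarity from Python’s `difflib`. The n-gram size, the whitespace tokenization, and the example strings are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def ngram_set(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """All length-n windows of a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap(generated: str, training: str, n: int = 3, normalize: bool = False) -> float:
    """Fraction of the generated word n-grams that also occur in the training text.

    Without normalization the text is split on single spaces, so extra spaces
    become empty tokens and capitalization matters: a strictly verbatim view.
    With normalization, text is lowercased and runs of whitespace are collapsed.
    """
    if normalize:
        gen_tokens, train_tokens = generated.lower().split(), training.lower().split()
    else:
        gen_tokens, train_tokens = generated.split(" "), training.split(" ")
    gen = ngram_set(gen_tokens, n)
    if not gen:
        return 0.0
    return len(gen & ngram_set(train_tokens, n)) / len(gen)


def char_similarity(generated: str, training: str) -> float:
    """Case-insensitive character-level similarity in [0, 1] via difflib."""
    return SequenceMatcher(None, generated.lower(), training.lower()).ratio()


training = "the boy who lived"
candidates = [
    "the boy who lived",     # verbatim copy
    "The Boy Who Lived",     # changed capitalization
    "the  boy  who  lived",  # added whitespace
    "teh boy woh lived",     # misspellings
]
for generated in candidates:
    print(
        repr(generated),
        overlap(generated, training),
        overlap(generated, training, normalize=True),
        round(char_similarity(generated, training), 2),
    )
```

Every perturbed string scores 0.0 on the strict overlap, normalization recovers the capitalization and whitespace variants, and only the character-level score stays high for the misspelled one.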

I’m going to stop here, and I’ll make another post on some of my thoughts about the paper and its broader implications.